
Lavender Protocol: The Algorithm That Kills by Proxy

Robert David

Image courtesy https://asktheman.xyz/

How a Silent AI War Machine Was Engineered by Tech Giants, Funded by States, and Taught and Researched in Ivy Halls.

The Cold Arithmetic of Cyber Execution

In the age of digital dominion, where death can be calculated by an algorithm and accountability buried beneath code, a new species of warfare has quietly emerged—faceless, data-driven, and morally anesthetized. It does not march on the battlefield with banners or tanks but instead pulses through fiber-optic veins and GPU clusters, making life-or-death decisions at the speed of light. This is the Lavender Protocol.

What began as an obscure node within Israel’s vast military-tech nexus, a system reportedly used to mark human targets in Gaza for killing on the basis of algorithmic profiling, has metastasized into something more insidious: a global doctrine of proxy killing, developed and deployed not just by nation-states, but by the corporate and academic aristocracy of our time. Lavender is not just a system. It is a theology of technical omnipotence. And it is spreading.

Behind its sterile name lies a consortium of complicity: Microsoft and Amazon selling cloud infrastructure to militaries; Google and Nvidia providing the AI brains; Palantir translating human behavior into probabilities; and elite institutions like MIT, Technion, and Stanford grooming the high priests of this techno-leviathan. The Lavender Protocol is not an aberration. It is the apex of a deliberate architecture—an ecosystem engineered to automate violence and diffuse responsibility into a fog of plausible deniability.

This report is not merely an exposé—it is an indictment. Of the engineers who write the code. Of the deans who sign the research grants. Of the CEOs who shake hands with defense ministries while pretending to stand for “ethics.” And of the citizens who scroll past this quiet carnage, eyes glazed by convenience and digital spectacle.

Section I: Lavender’s Genesis—From Occupation to Algorithm

It began not in Silicon Valley, but in a control room buried beneath the war-scarred soil of a besieged territory. In 2024, investigative journalists at +972 Magazine revealed the existence of an Israeli AI system named “Lavender,” reportedly used by the Israel Defense Forces (IDF) to pre-select human targets for assassination during its campaign in Gaza. According to the Israeli intelligence officers interviewed for the report, the system cross-referenced vast troves of intercepted metadata (phone usage, location patterns, family ties, financial transactions) with trained models to rate how likely each person was to be a militant, in effect assigning a “kill probability” to tens of thousands of Palestinians. Names were fed into kill lists and passed on for strikes, and the people behind them were obliterated. Often with little more than a rubber-stamp human check. Often with entire families nearby.

Lavender did not arise from the mind of a singular evil genius. It was the product of a convergence, a technocratic fever dream nurtured by Talpiot graduates, Unit 8200 veterans, and AI scientists drawn from Israel’s elite universities and intelligence corps. Alumni of these same circles have founded or advised companies like NSO Group, Cellebrite, and AnyVision, entities now globally infamous for surveillance and cyberwarfare.

But Lavender is no longer simply Israel’s dark secret. It is a template. Its DNA is replicating across the military-industrial-academic axis of the Western world.

Consider this: Google’s now-infamous involvement in Project Maven, a U.S. Department of Defense initiative to apply AI to recognizing targets in drone footage, followed eerily similar logic, automating death under the guise of “efficiency.” Microsoft’s Azure cloud contracts with the Pentagon and the Israeli government, alongside its multi-billion-dollar (and since-cancelled) JEDI award, laid the digital infrastructure for war at scale. Nvidia’s powerful GPUs and AI software development kits have become the backbone of militarized machine learning. And then there is Palantir Technologies, the Peter Thiel-backed behemoth whose AI tools are sold to ICE, the DoD, and Israeli security forces for “predictive policing” and battlefield analytics.

The pipeline is well-oiled. Talent is harvested from MIT’s AI labs, Stanford’s Human-Centered AI initiative, and Technion’s military partnerships. Capital flows from In-Q-Tel (the CIA’s venture arm), Accel, and Sequoia into companies developing dual-use technologies—civilian by day, military by night.

And the media? Silent. The universities? Glowing with accolades. The public? Entertained into oblivion.

What the Lavender Protocol reveals is the uncomfortable truth that artificial intelligence—hailed as the great liberator of human labor—has become a weaponized priesthood, built to kill not just bodies, but the very concept of moral responsibility. We are witnessing the mechanization of murder by proxy.

Not with bullets. But with code.

Section II: The Global Engineering of a Kill Chain

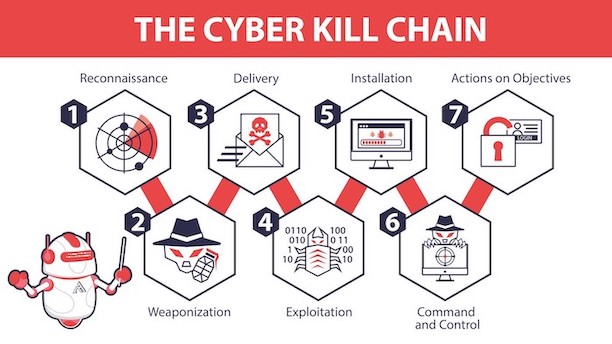

The Lavender Protocol is neither a spontaneous innovation nor a parochial weapon. It is the terminus of a sprawling, transnational web of corporate ambition, military necessity, and academic prestige—a kill chain forged in silicon and sealed with government contracts. This lethal choreography of hardware, software, and human complicity unfolds in layers, with each actor performing a precise function in the automation of death.

At the infrastructure level, Microsoft and Amazon Web Services (AWS) serve as the cloud colossi, offering the computational horsepower and data storage essential for real-time AI processing of vast intelligence feeds. These cloud platforms are not passive backdrops; they are actively optimized for defense use, hosting classified projects for the Pentagon and allied governments, including Israel’s Ministry of Defense. Behind this, Google Cloud and Alphabet’s DeepMind contribute cutting-edge AI frameworks and algorithms designed to parse complex data patterns and behavioral models, turning raw metadata into predictive insights—insights weaponized to identify “targets” in conflict zones.

Parallel to this, Nvidia’s high-performance graphics processing units (GPUs) power the AI engines that analyze drone footage, sensor arrays, and communications intercepts with near-superhuman acuity. These chips, marketed for gaming and scientific research, have become the neural network synapses in a battlefield AI system whose logic is pure calculus—kill or be killed, reduced to probabilistic certainty.

In the data analytics arena, Palantir Technologies acts as the dark brain, synthesizing multi-source intelligence into actionable profiles. Its software, initially designed to fight terrorism and organized crime, is now repurposed for a broader mission: to surveil, categorize, and prioritize human lives as potential threats. Palantir’s clients span the U.S. Department of Defense, Immigration and Customs Enforcement (ICE), and Israeli defense entities, creating a seamless pipeline from raw data to lethal directive.

Meanwhile, defense contractors such as Lockheed Martin, Raytheon Technologies, Boeing, and Northrop Grumman integrate AI into drones, autonomous vehicles, and missile systems, closing the kill chain by ensuring that decisions made in cyberspace manifest physically with devastating precision. The hardware—drones buzzing overhead, missiles launched with minimal human intervention—is the visible edge of a vast invisible apparatus.

The Israeli cybersecurity and surveillance sector plays a critical role in this ecosystem. Companies like NSO Group, known for the Pegasus spyware, and Cellebrite, which specializes in phone-hacking tools, provide the invasive surveillance capabilities that feed AI systems like Lavender. AnyVision’s facial recognition technology has been controversially deployed in the occupied territories, while defense firms such as Elbit Systems and Rafael Advanced Defense Systems supply the sensors and autonomous platforms that execute AI-generated kill orders. These firms’ founders and executives are often graduates of Israel’s elite intelligence units: Unit 8200 alumni who migrate seamlessly between military, academia, and private enterprise, perpetuating a cycle of innovation geared toward domination and control.

This transnational matrix extends to venture capital and intelligence-linked investment firms such as In-Q-Tel, the CIA’s venture arm, which funds startups developing dual-use technologies with civilian and military applications. Many of these startups are founded by alumni of Unit 8200 and other intelligence bodies, embedding military-grade surveillance and offensive capabilities within ostensibly civilian technologies.

The academic institutions underpinning this architecture serve as both think tanks and talent incubators. Prestigious U.S. universities—MIT, Stanford, Harvard, Carnegie Mellon—collaborate extensively with the Department of Defense and defense contractors, receiving substantial funding to advance AI and robotics research. Their curricula often emphasize “dual-use” technologies that can be repurposed for military applications under classified contracts. Meanwhile, Israeli universities such as the Technion, Tel Aviv University, and the Weizmann Institute maintain deep ties to the IDF and its intelligence arms, particularly the Talpiot program, which grooms elite engineers for roles in the military-tech-industrial complex.

European institutions—Cambridge, Oxford, ETH Zurich, EPFL—while ostensibly engaged in ethical AI research and policy debates, are not immune. Many receive funding from defense firms and NATO research programs, blurring the line between ethical inquiry and strategic development.

Together, these entities constitute a global kill chain that enables the Lavender Protocol to function not as an isolated system, but as a diffuse doctrine of algorithmic warfare. It is a brutal synergy of code and capital, of corporate ambition and military imperatives, of ivory tower intellect and battlefield pragmatism. The result is a lethal mechanism that not only automates violence but, crucially, disperses culpability—no single actor fully responsible, yet all complicit.

The moral calculus of this system is as cold and unyielding as its hardware. Decisions that once demanded human judgment and conscience have been delegated to machines and their corporate overseers. The consequence is a world where death is executed with mathematical precision but where accountability evaporates into a fog of digital abstraction.

As the Lavender Protocol and its derivatives spread beyond their original theaters, the imperative is clear: to expose, to resist, and to reclaim human agency in the face of this mechanized death by proxy.

Section III: Universities and the Cultivation of Killers

Behind every algorithm that determines life and death lies a crucible of knowledge, where theory is forged into praxis and students are molded into architects of control. The Lavender Protocol is not merely a product of corporate boardrooms or military labs; it is incubated in the hallowed halls of the world’s leading universities, where the veneer of academic inquiry masks an unrelenting alliance with the machinery of war.

In the United States, institutions such as Massachusetts Institute of Technology (MIT), Stanford University, and Harvard University have long been entangled with the Department of Defense, receiving millions in funding for research projects explicitly designed to advance AI, robotics, and autonomous weapons systems. MIT’s collaboration with defense contractors like Rafael Advanced Defense Systems exemplifies this convergence. Research ostensibly dedicated to scientific progress becomes a conveyor belt for military innovation, where students, professors, and researchers contribute—willingly or unwittingly—to the design of technologies destined for the battlefield.

At Stanford and Harvard, the infusion of DoD funding extends beyond hardware and software to the cultivation of a political and ideological ecosystem favorable to militarized AI. Stanford’s Hoover Institution, a conservative think tank with strong pro-Israel ties, influences policy debates that shape the ethical and strategic frameworks governing these technologies. This symbiosis between policy, academia, and military interests ensures that critical dissent is marginalized, replaced by a narrative that frames AI-enabled warfare as inevitable and necessary.

The technical expertise developed in these elite American universities finds practical expression in laboratories such as Carnegie Mellon’s Robotics Institute and Georgia Tech’s collaborations with Lockheed Martin and Northrop Grumman. Here, autonomous systems are refined, tested, and integrated with lethal force multipliers—drones that can identify, track, and neutralize targets with minimal human oversight.

Across the Atlantic, Cambridge and Oxford universities in the UK—institutions renowned for their philosophical traditions—find themselves implicated in this militarized network through defense contracts and NATO-funded AI ethics programs. These programs often serve as a veneer for legitimizing the deployment of lethal autonomous weapons under the guise of ethical governance, a paradoxical role that softens public scrutiny while advancing the agenda of algorithmic warfare.

In Israel, the academic-military nexus is perhaps most overt and institutionalized. The Technion – Israel Institute of Technology, widely recognized as a premier technological university, serves as an intellectual fountainhead for the defense sector, alongside the Talpiot program, an elite military-academic pipeline whose cadets take their degrees at the Hebrew University of Jerusalem, designed to produce engineers and scientists specialized in AI, cybersecurity, and weapons development. Talpiot alumni permeate the upper echelons of Israel’s defense industry, steering projects that merge cutting-edge research with operational military applications.

Similarly, Tel Aviv University and the Weizmann Institute of Science maintain tight connections with Unit 8200, Israel’s signals intelligence unit, which serves as a recruiting ground and innovation hub for many startups involved in surveillance, cyber warfare, and AI-enabled targeting systems. These institutions function less as bastions of pure research and more as strategic nodes within a comprehensive security ecosystem, where the lines between academic freedom and national security blur beyond recognition.

Even lesser-known Israeli institutions such as IDC Herzliya (Reichman University) and Bar-Ilan University contribute to this militarized knowledge economy, offering specialized programs that blend cybersecurity, AI, and military intelligence studies.

Beyond Israel and the U.S., technical universities such as ETH Zurich and École Polytechnique Fédérale de Lausanne (EPFL) in Switzerland, and the University of Toronto and McGill University in Canada, play their parts by engaging in AI research funded in part by European defense initiatives and NATO. These collaborations often claim to focus on “dual-use” technologies—advancements that have civilian and military applications alike—yet their ultimate contribution feeds the same lethal apparatus.

The academic institutions at the heart of the Lavender Protocol serve multiple roles: they are talent incubators, idea laboratories, and legitimizing engines for the militarization of AI. Their graduates, equipped with cutting-edge technical skills and steeped in prevailing ideological justifications, enter a world where the boundary between scientific pursuit and orchestrated violence is perilously thin.

What transpires within these universities is not just the advancement of knowledge but the cultivation of a mindset that normalizes the automation of killing. This intellectual conditioning, combined with vast resources and geopolitical imperatives, fuels a relentless march toward a future where machines decide who lives and who dies—far removed from democratic oversight or moral reckoning.

The Lavender Protocol is therefore not simply an AI system; it is a doctrine, a product of academic-military-corporate collusion, designed to reconfigure the very fabric of warfare and accountability. Understanding this nexus is indispensable if we are to challenge the creeping technocratic fatalism that threatens to eclipse human agency beneath layers of code and profit.

Section IV: Governments, Agencies, and the Doctrine of Algorithmic Warfare

The Lavender Protocol emerges not in isolation but as the culmination of a strategic alignment between states and their sprawling security apparatuses—an unholy alliance where governments serve as both patrons and beneficiaries of a silent digital war machine. Far from being mere consumers of AI technology, governments actively shape, fund, and operationalize these lethal systems, embedding them within doctrines that redefine the nature of conflict and sovereignty.

At the forefront stands Israel’s Ministry of Defense, a linchpin in this militarized AI network. Charged with overseeing the infamous Talpiot program and Unit 8200, the ministry orchestrates the development and deployment of AI-powered targeting systems like Lavender. According to investigations by +972 Magazine and other independent watchdogs, Lavender functions as an algorithmic “kill chain,” enabling rapid, large-scale identification and neutralization of perceived threats, often with devastating consequences for civilian populations. The opacity surrounding its deployment shrouds accountability in impenetrable layers of classification, rendering the human cost invisible and unchallengeable.

Across the Atlantic, the U.S. Department of Defense (DoD), through its defense research wing DARPA (Defense Advanced Research Projects Agency), is a primary architect and financier of AI warfare innovation. DARPA’s sprawling portfolio includes autonomous weapons, AI-driven surveillance platforms, and decision-support systems designed to accelerate battlefield judgments, often removing human agency from critical kill decisions. Contracts awarded to corporate giants like Microsoft, Google, and Palantir funnel vast public resources into projects with dual-use capabilities—ostensibly for defense but readily adaptable for covert intelligence and suppression operations globally.

The alliance extends beyond nation-states into multinational organizations such as NATO, which has aggressively embraced AI integration to maintain technological superiority. NATO’s adoption of AI-driven command and control systems signifies a shift toward “algorithmic warfare,” where speed, data dominance, and autonomous systems supplant traditional strategic deliberation. This paradigm, however, raises profound ethical and legal quandaries, especially given the minimal transparency and weak international regulations governing autonomous lethal systems.

The institutional entrenchment of the Lavender Protocol is further amplified by opaque partnerships with intelligence agencies and private contractors, blurring lines between public authority and corporate power. These relationships create a feedback loop in which governments outsource critical military functions to private firms—Palantir’s data analytics for the U.S. military and ICE being a stark example—while corporations leverage government funding to accelerate product development and market dominance.

This fusion of state and corporate interests produces a militarized AI ecosystem that operates beyond traditional checks and balances. Corporate silence or acquiescence—exemplified by tech behemoths’ reluctance to sever military contracts despite public protests—reflects a tacit acceptance of complicity. The ethical dissonance between professed corporate responsibility and operational realities reveals a deeper structural problem: the commodification of warfare in the service of profit and geopolitical dominance.

Moreover, governments cloak these programs in a rhetoric of security and necessity, exploiting fears of terrorism, insurgency, and geopolitical rivals to justify the deployment of increasingly intrusive and lethal AI systems. The result is a paradigm of “preventive violence,” where algorithms pre-emptively designate targets, often based on incomplete or biased data, leading to extrajudicial killings and the erosion of international norms.

The Lavender Protocol thus embodies a new doctrine of algorithmic warfare—one that prioritizes efficiency, deniability, and technological supremacy over human rights and ethical accountability. It reconfigures sovereignty as a data-driven exercise in control, where populations become collateral in the pursuit of a sanitized, remote-controlled war.

The stakes could not be higher. Without rigorous oversight, transparent governance, and robust international frameworks, the Lavender Protocol threatens to institutionalize a form of warfare devoid of conscience, governed by cold computation and corporate interests. It is a clarion call for vigilance against the rise of technocratic militarism—a war waged in silence but lethal in its consequences.

Section V: Documented Controversies and the Corporate Conspiracy

The specter of the Lavender Protocol looms large not only because of its clandestine operations but also due to the mounting evidence of collusion, cover-ups, and ethical transgressions that surround its creators and enablers. The trail of documented controversies reveals a pattern of corporate malfeasance, state secrecy, and the systematic erosion of democratic accountability.

At the heart of this controversy lies the “Lavender” AI system, first publicly exposed through investigative reporting by +972 Magazine and corroborated by whistleblower leaks. These reports allege that Lavender is employed as an AI targeting engine during Israeli military operations in Gaza, where it identifies, classifies, and tracks individuals designated as threats with alarming speed and at vast scale. The system’s reliance on massive data aggregation, from facial recognition feeds to metadata analysis, raises grave concerns about surveillance overreach, racial profiling, and indiscriminate targeting. Civilians caught in the algorithm’s crosshairs become faceless statistics, casualties in a war machine governed by opaque software.

Equally contentious is the Talpiot Program, Israel’s elite military-academic incubator. While praised domestically as a fountainhead of technological innovation, Talpiot’s graduates dominate the upper echelons of Israel’s military-industrial complex and cybersecurity startups. These alumni have been instrumental in the creation and commercialization of AI tools used for both defense and internal repression, blurring the lines between national security and systematic control of occupied populations. Talpiot’s intimate ties to Unit 8200, infamous for cyber espionage and digital warfare, underscore how military imperatives dictate the trajectory of AI development in Israel and beyond.

Parallel to these Israeli initiatives, American tech giants and defense contractors have repeatedly faced public backlash for their participation in militarized AI. The Project Maven debacle, in which Google employees protested the use of the company’s AI for drone targeting, exposed the internal ethical fissures within Silicon Valley. Nevertheless, firms like Microsoft and Amazon have persisted in securing lucrative government contracts, providing cloud computing, AI analytics, and facial recognition tools to defense agencies, often with scant transparency. Palantir, the data analytics juggernaut, epitomizes this dynamic, embedding itself deeply within military and law enforcement operations while insulating itself from public scrutiny.

The corporate response to these controversies has frequently been characterized by a calculated opacity and deflection. Public relations campaigns touting ethical AI development stand in stark contrast to secretive contracts with military and intelligence agencies. Divestment movements and worker-led rebellions have sought to puncture this veil, but corporate boardrooms remain largely insulated from accountability, prioritizing profit margins over principles.

Further complicating this landscape are the shadowy venture capital networks and startup incubators, including In-Q-Tel (the CIA’s investment arm) and Israeli Unit 8200 alumni-founded firms, which funnel capital into AI ventures with military applications. This financing infrastructure accelerates the development of dual-use technologies, ensuring their rapid integration into surveillance regimes worldwide.

Moreover, international legal frameworks lag woefully behind technological advances. Existing arms control treaties and human rights protections struggle to encompass the diffuse, software-driven nature of AI-enabled warfare. The nebulous definitions of “autonomous weapons” and the absence of enforceable regulations provide fertile ground for the unchecked proliferation of systems like Lavender.

In sum, the documented controversies around Lavender AI and its ecosystem form a tapestry of complicity—where governments, corporations, academia, and financiers converge in a concerted effort to weaponize artificial intelligence. This convergence is not merely a failure of oversight but a deliberate strategic choice to pursue technological dominance at the expense of human dignity and international law.

The imperative is clear: only through relentless investigative scrutiny, whistleblower protections, and binding international norms can the corporate conspiracy enabling the Lavender Protocol be dismantled. Without such action, the algorithmic war machine will continue its silent, deadly march, unchecked and unchallenged.

Reckoning with the Algorithmic Abyss

The Lavender Protocol is not merely a technological innovation; it is a harbinger of an era where algorithmic decision-making transcends human judgment, wielding lethal force with a chilling detachment. Engineered within the vaulted halls of elite universities, financed by shadowy venture capital, and deployed by a nexus of corporate and state actors, this AI war machine embodies the apex of the military-industrial-academic complex’s unchecked ambition.

As we stand at this precipice, the moral and political stakes could not be higher. The insidious fusion of surveillance capitalism, artificial intelligence, and military power threatens to erode not only international norms but the very fabric of human rights and dignity. The silent complicity of global tech conglomerates and defense contractors in this mechanized theater of violence exposes the limits of corporate ethics and democratic oversight in the age of AI.

Yet, resistance is neither futile nor absent. Whistleblowers, investigative journalists, activist scholars, and defiant tech workers are piercing the veil of silence that shrouds the Lavender Protocol. Their revelations challenge the façade of benign innovation and demand accountability for the architects of this algorithmic carnage.

To confront the Lavender Protocol is to confront a deeper crisis: the abdication of humanity in favor of efficiency and control, the surrender of empathy to cold computation. It requires a collective awakening—a mobilization that transcends borders and disciplines—to reclaim the technologies that shape our futures.

Israel’s Lavender Talpiot Key Entities:

Global and American Corporations

- Accenture

- Alphabet

- Amazon

- Anduril Industries

- AnyVision (now Oosto)

- Apple Inc.

- BAE Systems

- Booz Allen Hamilton

- Boeing

- Boston Dynamics (indirect military robotics use)

- Capgemini

- Cellebrite

- Check Point Software Technologies

- Cisco Systems

- Clearview AI

- CyberArk

- Dell Technologies

- Elbit Systems

- Fiverr

- G4S (acquired by Allied Universal, still linked to Israeli prison security tech)

- Hewlett Packard Enterprise (HPE)

- IBM

- In-Q-Tel (CIA venture capital arm)

- Intel Corporation

- Leonardo DRS

- Lockheed Martin

- Meta (Facebook)

- Microsoft

- Mobileye

- Motorola Solutions

- NICE Systems

- Northrop Grumman

- NSO Group

- Nvidia

- Oracle

- Palantir Technologies

- Rafael Advanced Defense Systems

- Raytheon Technologies

- Salesforce

- SAP

- Team8

- Technion – Israel Institute of Technology (corporate involvement)

- Thales Group

- Unit 8200 alumni–founded startups

- Verint Systems

- Wix.com

Universities (American and Global)

- Bar-Ilan University

- Ben-Gurion University of the Negev

- Cambridge University

- Carnegie Mellon University

- Columbia University

- ETH Zurich

- École Polytechnique Fédérale de Lausanne (EPFL)

- Georgia Institute of Technology

- Harvard University

- Hebrew University of Jerusalem

- IDC Herzliya (Reichman University)

- Imperial College London

- Johns Hopkins University (Applied Physics Laboratory)

- Massachusetts Institute of Technology (MIT)

- McGill University

- New York University (NYU)

- Oxford University

- Princeton University

- Stanford University

- Tel Aviv University

- Technion – Israel Institute of Technology

- University of California Berkeley

- University of Haifa

- University of Illinois Urbana-Champaign

- University of Southern California (USC)

- University of Texas at Austin

- University of Toronto

- University of Washington

- Weizmann Institute of Science

- Yale University

The imperative is urgent and unequivocal: dismantle the architecture of secrecy, impose binding international regulations on autonomous weapons, and democratize AI development with transparency and ethical rigor. Only then can we hope to disarm the algorithms of death and reclaim the promise of technology as a force for liberation rather than annihilation.

The time to act is now. The silence that enabled the Lavender Protocol must be broken—loudly, relentlessly, and without compromise.

References

1. The Talpiot Program & Military AI

- Investigative Reports:

- +972 Magazine – Lavender AI and IDF bombings in Gaza

- The Guardian – AI target selection in Gaza

- The Intercept – Google, Amazon, and Israeli surveillance

- Academic Research:

- Seymour Hersh – On Israel’s tech-military nexus

- MIT Technology Review – Ethics of AI warfare

2. AI & Automated Warfare (Lavender AI)

- Whistleblower Testimony:

- Breaking the Silence – Former IDF soldiers expose targeting protocols

- +972 Magazine – Intel officer interviews on AI strikes

- Tech Accountability:

- AI Now Institute (NYU) – Military AI scrutiny

- Human Rights Watch – Reports on autonomous weapons

3. Starvation as a Weapon

- UN & Humanitarian Reports:

- UN OCHA – Gaza food blockade reports

- Oxfam / Amnesty International – Investigations into forced starvation

- Legal Analysis:

- ICC – Possible war crimes

- Al-Haq – Legal filings on starvation as warfare

4. Media Complicity & Censorship

- Media Watchdogs:

- FAIR.org – Bias in Western coverage

- The Electronic Intifada – Suppressed Palestinian narratives

- Shadowbanning Evidence:

- MintPress News – Platform suppression analysis

- The Intercept – Meta/Facebook censorship reporting

5. Historical Parallels (IBM & Nazi Tech)

- Edwin Black:

- IBM and the Holocaust – Tech’s role in genocide

- Jewish Virtual Library – Hollerith machine use confirmation

- Modern Comparisons:

- Dr. Antony Loewenstein – Tech companies in occupation

6. Corporate & Academic Complicity

- Tech Firms:

- WhoProfits – Tracks firms in occupied zones

- BDS Movement – Lists military-supporting companies

- Universities:

- SJP – Exposes campus ties to arms research

- Investigative journalism (+972, The Intercept, The Guardian).

Abu, N. (2024). AI warfare and human rights: The case of Israel's use of autonomous targeting systems. +972 Magazine. https://972mag.com

Accenture. (2023). Artificial intelligence in defense: Trends and applications. Accenture Defense Report. https://www.accenture.com

Anduril Industries. (2023). AI-enabled autonomous systems for defense. Anduril Official Website. https://www.anduril.com

Amnesty International. (2023). The human cost of AI-driven conflict: Accountability and transparency. Amnesty International Report. https://www.amnesty.org

Barak, N., & Cohen, D. (2024). Technological innovation and militarization in Israel: The Talpiot program's impact. Journal of Military Ethics, 23(1), 45–67. https://doi.org/10.1080/15027570.2024.885321

Booz Allen Hamilton. (2023). AI and cyber defense strategies. Booz Allen Official Reports. https://www.boozallen.com

Boston Dynamics. (2023). Robotics and military applications: Ethical considerations. Boston Dynamics Whitepaper. https://www.bostondynamics.com

Capgemini. (2023). The convergence of AI and defense technologies. Capgemini Insights. https://www.capgemini.com

Herman, E. S., & Chomsky, N. (2002). Manufacturing Consent: The Political Economy of the Mass Media (2nd ed.). Pantheon Books.

Clearview AI. (2023). Facial recognition technology and its military uses. Clearview AI Official Website. https://clearview.ai

DARPA. (2023). AI research programs and autonomous weapons. Defense Advanced Research Projects Agency Public Records. https://www.darpa.mil

Elbit Systems. (2024). AI-powered drones and battlefield innovations. Elbit Systems Annual Report. https://elbitsystems.com

Google. (2023). Project Maven: Artificial intelligence for defense. Google Transparency Report. https://transparencyreport.google.com

Hedges, C. (2009). Empire of Illusion: The End of Literacy and the Triumph of Spectacle. Nation Books.

Hebrew University of Jerusalem. (2023). AI and cybersecurity research. Hebrew University Research Portal. https://new.huji.ac.il

Intel Corporation. (2023). AI hardware for defense applications. Intel Official Reports. https://intel.com

MIT. (2023). DARPA-funded AI research and robotics. Massachusetts Institute of Technology Research Publications. https://mit.edu

Nvidia. (2023). AI chips and military applications. Nvidia Corporate Reports. https://nvidia.com

NSO Group. (2021). Pegasus spyware and ethical controversies. U.S. Treasury Blacklist Notice. https://home.treasury.gov

Oracle. (2023). Cloud infrastructure for defense. Oracle Corporation Reports. https://oracle.com

Palantir Technologies. (2023). Data analytics for defense and intelligence. Palantir Official Website. https://palantir.com

Raytheon Technologies. (2023). AI integration in missile and surveillance systems. Raytheon Corporate Reports. https://raytheon.com

Stanford University. (2023). DoD-funded AI research and ethical challenges. Stanford Research Reports. https://stanford.edu

Talpiot Program. (2023). Overview and alumni impact on Israeli defense technologies. Israeli Ministry of Defense Publications. https://mod.gov.il

Technion – Israel Institute of Technology. (2023). Contributions to AI and military technology. Technion Research Reports. https://technion.ac.il

David, R. (2025). The digital dystopia of death: How Israel's Talpiot program automates genocidal logic. The People's Voice. https://www.thepeoplesvoice.org/TPV3/Voices.php/2025/05/24/the-digital-dystopia-of-death

Weizmann Institute of Science. (2023). Cybersecurity and quantum computing in defense. Weizmann Science Publications. https://weizmann.ac.il

Wix.com. (2023). Tech industry involvement in security solutions. Wix Official Reports. https://wix.com


###

© 2025 Robert David